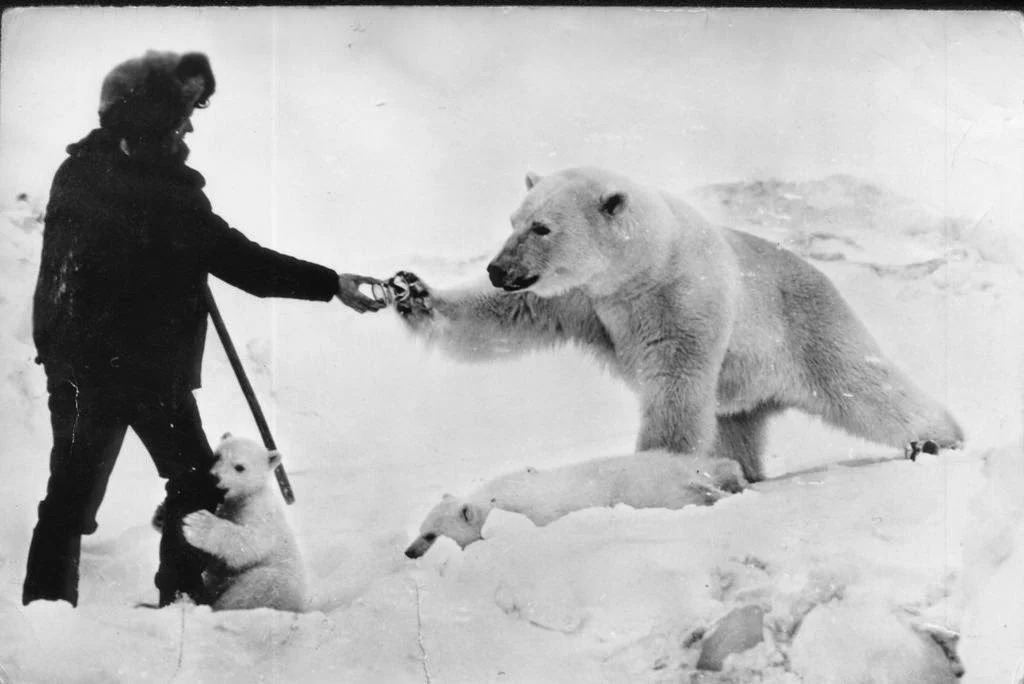

Photo: Unknown / Soviet Army

Last week, PetaPixel published an article featuring photos of Soviet soldiers hand-feeding polar bears. You wouldn’t believe it if you didn’t see it, yet here was photographic evidence from the 1950s showing that humans did in fact interact with polar bears without becoming their latest meal. Still, many readers weren’t convinced the images were authentic.

Generative AI Causing Doubt

That scepticism makes sense. Anyone who has watched a documentary on polar bears knows they are among the most dangerous predators a human can encounter. They will hunt you if you are in their vicinity, and unless you are armed, your chances of survival are slim.

If the soldiers had told this as a verbal story, few would have believed them. But as recently as four years ago, the images alone would have been enough to ease doubts. That is no longer the case. Generative AI has trained us to second-guess what we are actually seeing.

“AI slop,” wrote one reader. “And we believe all of this, too,” said another. It is fair to question whether images are genuine, especially when AI visuals keep fooling people, as they did during the 2024 Met Gala. The creator of the images is also unknown, which adds to the scepticism.

In this case, however, the images are real. An article featuring them, with comments dating back to 2014, still exists. That places the images online long before generative AI flooded the internet.

Fighting Against Generative AI

Over the past few years, creative and honest photographers have increasingly had to defend their work. In 2024, I interviewed Nina Nayko, a Georgian photographer known for her conceptual imagery. We spoke about the harms of generative AI, and she told me, “…my animations are very dependent on digital editing and are surrealistic. People assume it’s generated with AI tools, when it’s actually my work that AI steals from.”

Photo: Nina Nayko.

When researching photographers today, I often see “Not AI” included in their bios. This is only going to become more common as AI-generated images continue to blend seamlessly into our feeds and news platforms.

Photographers are being forced to defend their work when they shouldn’t need to, and people are starting to question their talent and effort. At best, this creates frustration and conflict. At worst, it leaves many photographers asking themselves, “What’s the point in creating anymore?”

The Solution…

The photography industry is attempting to respond, but it still has a long way to go. Content credentials are currently offered by Adobe and supported by some social media platforms. Camera manufacturers like Leica, Fujifilm, and Nikon have begun integrating content credentials into certain models, but not across the board.

Even then, the system relies on a sceptical viewer manually uploading an image to check whether those credentials are present. Realistically, most people on the internet will never take that step.

The most extreme solution would be to outlaw generative AI entirely. While this would come with clear benefits, the idea of lawmakers restricting access to creative tools, especially ones that can have legitimate uses, does not sit comfortably with me.

A more realistic approach may be for camera manufacturers and the makers of the best photo editing software to add a small authenticity logo to every verified image. It is far from ideal, particularly for those who feel logos and watermarks detract from the beauty of a photograph.

But this is the world we now live in. A solution like this may be the lesser of several evils. Whatever path is chosen, something clearly needs to be done to help protect photography, and the pleasure and trust that come from viewing human-made imagery.

Want your work featured on Them Frames? Pitch us.